About

I am a Master’s student at the University of Michigan, Ann Arbor, specializing in Robotics and Autonomous Vehicles. I completed my undergraduate degree in Mechanical Engineering at Walchand Institute of Technology (WIT), Solapur University, India.

My interests lie in exploring how learning-based methods, particularly in computer vision, deep learning, and language models, can bridge the gap between perception, SLAM, navigation, and control, helping robots and autonomous systems better understand and interact with complex environments.

I have experience with scene understanding, semantic mapping, 3D reconstruction, and uncertainty modeling. I have also worked on projects involving state estimation, motion planning and navigation, and optimization techniques for autonomous systems. I am currently a researcher in Professor Maani Ghaffari's Computational Autonomy and Robotics Laboratory (CURLY Lab), where my work focuses on Temporally Fused Scene Completion.

I am currently looking for roles focused on perception, SLAM, motion planning, and controls for robotics and autonomous vehicles.

Experience

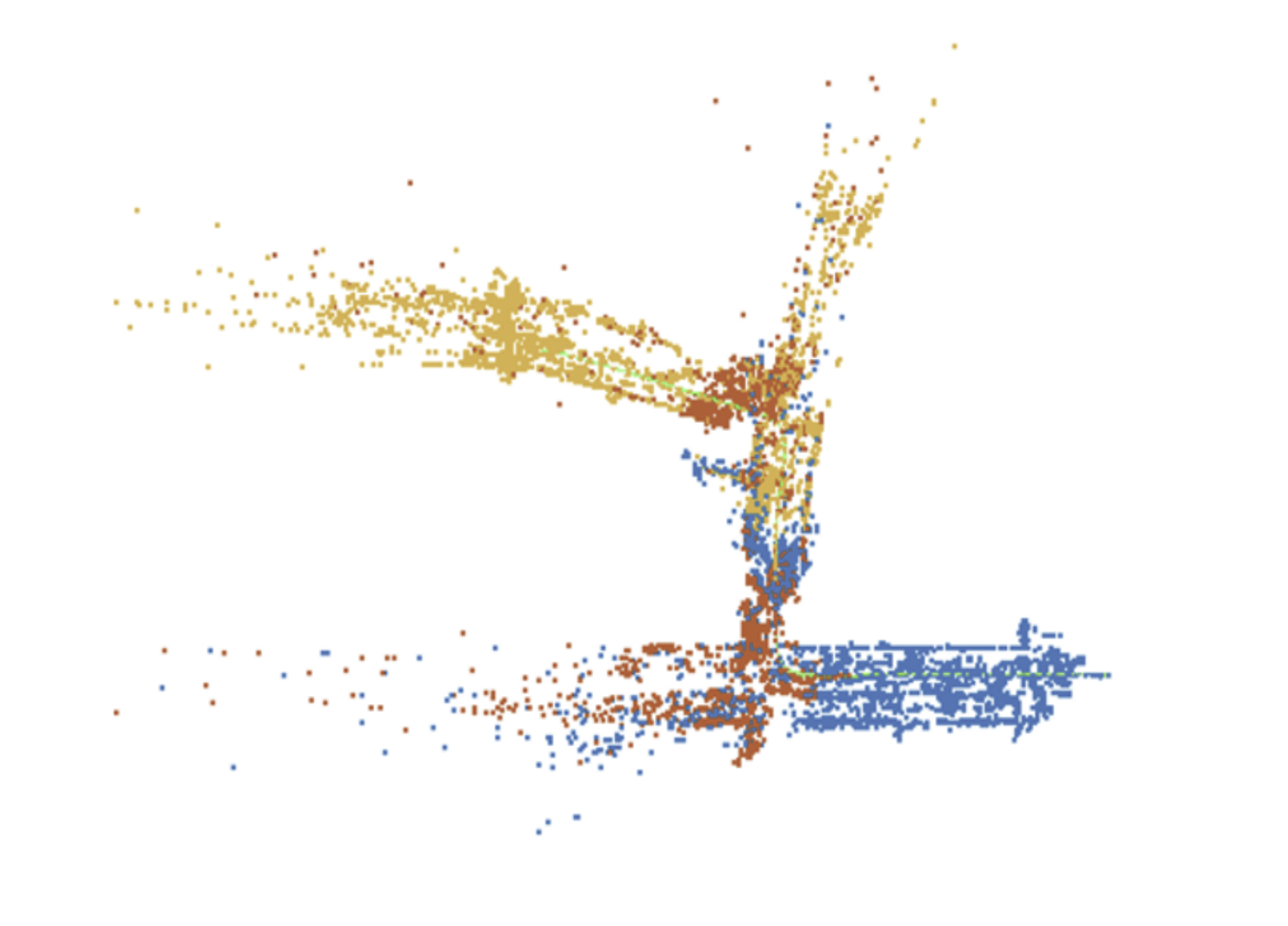

BEV-Based Temporal Fusion for Scene Completion on LiDAR Data

Research Assistant, CURLY Lab (May 2024 – Present)

Integrated FuseNet LSTM/UNet architectures into LMSCNet for BEV-based temporal fusion. Developed local 3D mapping with a voxel-based sliding-window approach for dynamic scene completion, and incorporated uncertainty and confidence estimates into the model.
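
To make the sliding-window idea concrete, here is a minimal Python sketch of a voxel map that only remembers the most recent scans. It is an illustration under stated assumptions, not the lab's implementation: the class name `SlidingWindowVoxelMap`, the 0.2 m voxel size, and the five-scan window are hypothetical, and scans are assumed to already be registered into a common local frame.

```python
import math
from collections import Counter, deque
from typing import Deque, Iterable, List, Set, Tuple

Point = Tuple[float, float, float]
Voxel = Tuple[int, int, int]


class SlidingWindowVoxelMap:
    """Voxel hit counts over the most recent `window` scans (hypothetical sketch)."""

    def __init__(self, voxel_size: float = 0.2, window: int = 5) -> None:
        self.voxel_size = voxel_size
        self.window = window
        self.scans: Deque[List[Voxel]] = deque()
        self.counts: Counter = Counter()

    def voxelize(self, points: Iterable[Point]) -> List[Voxel]:
        # Integer voxel index of each point; a set so each scan hits a voxel once
        s = self.voxel_size
        return sorted({(math.floor(x / s), math.floor(y / s), math.floor(z / s))
                       for x, y, z in points})

    def push(self, points: Iterable[Point]) -> None:
        voxels = self.voxelize(points)
        self.scans.append(voxels)
        self.counts.update(voxels)
        if len(self.scans) > self.window:
            # Slide the window: forget the oldest scan's contribution
            self.counts.subtract(self.scans.popleft())
            self.counts += Counter()  # in-place add drops zero/negative counts

    def occupied(self, min_hits: int = 1) -> Set[Voxel]:
        # Voxels observed in at least `min_hits` scans inside the window
        return {v for v, c in self.counts.items() if c >= min_hits}
```

Evicting whole scans, rather than decaying individual voxels, keeps the bookkeeping to one counter update per scan, and `min_hits` acts as a crude filter that favors voxels seen repeatedly within the window.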

Dynamic 3D Reconstruction with Gaussian Splatting and Scene Flow Estimation

Robotics Intern, ROAHM Lab (May 2024 – August 2024)

Developed a dynamic Gazebo simulation with multi-view cameras and 3D LiDAR, visualized in ROS2 and RViz. Performed 3D/4D Gaussian splatting for warehouse reconstruction, optimized sensor placement, and integrated rigid scene flow for dynamic consistency.

Digital Twin Creation using Gaussian Splatting and Multi-View Capture

Research Cohort, Capoom, TechLab at Mcity (January 2024 – August 2024)

Designed a parallel-capture pipeline with eight calibrated Lucid cameras on a Mach-E, synchronizing frames at 30 Hz. Leveraged GPU-accelerated Gaussian Splatting to reconstruct a centimeter-accurate 3D digital twin, cutting build time by 4× relative to COLMAP baselines.
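
Frame synchronization across cameras can be illustrated with nearest-timestamp matching. This sketch assumes software timestamps in seconds and groups frames against a reference camera with a half-period tolerance (about 16.7 ms at 30 Hz); the function names and tolerance are hypothetical, and a hardware-triggered rig may not need this step at all.

```python
from bisect import bisect_left
from typing import Dict, List, Optional


def nearest(ts: List[float], t: float) -> Optional[float]:
    """Closest timestamp to t in the sorted list ts (None if ts is empty)."""
    if not ts:
        return None
    i = bisect_left(ts, t)
    # Only the neighbors on either side of the insertion point can be closest
    candidates = ts[max(i - 1, 0): i + 1]
    return min(candidates, key=lambda x: abs(x - t))


def sync_frames(streams: Dict[str, List[float]], ref: str,
                tol: float = 1.0 / 60.0) -> List[Dict[str, float]]:
    """Group one frame per camera around each reference timestamp.

    A group is dropped if any camera has no frame within `tol` seconds.
    """
    groups = []
    for t in streams[ref]:
        group: Dict[str, float] = {}
        for cam, ts in streams.items():
            match = nearest(ts, t)
            if match is None or abs(match - t) > tol:
                break  # incomplete set of views for this instant
            group[cam] = match
        else:
            groups.append(group)
    return groups
```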

Projects

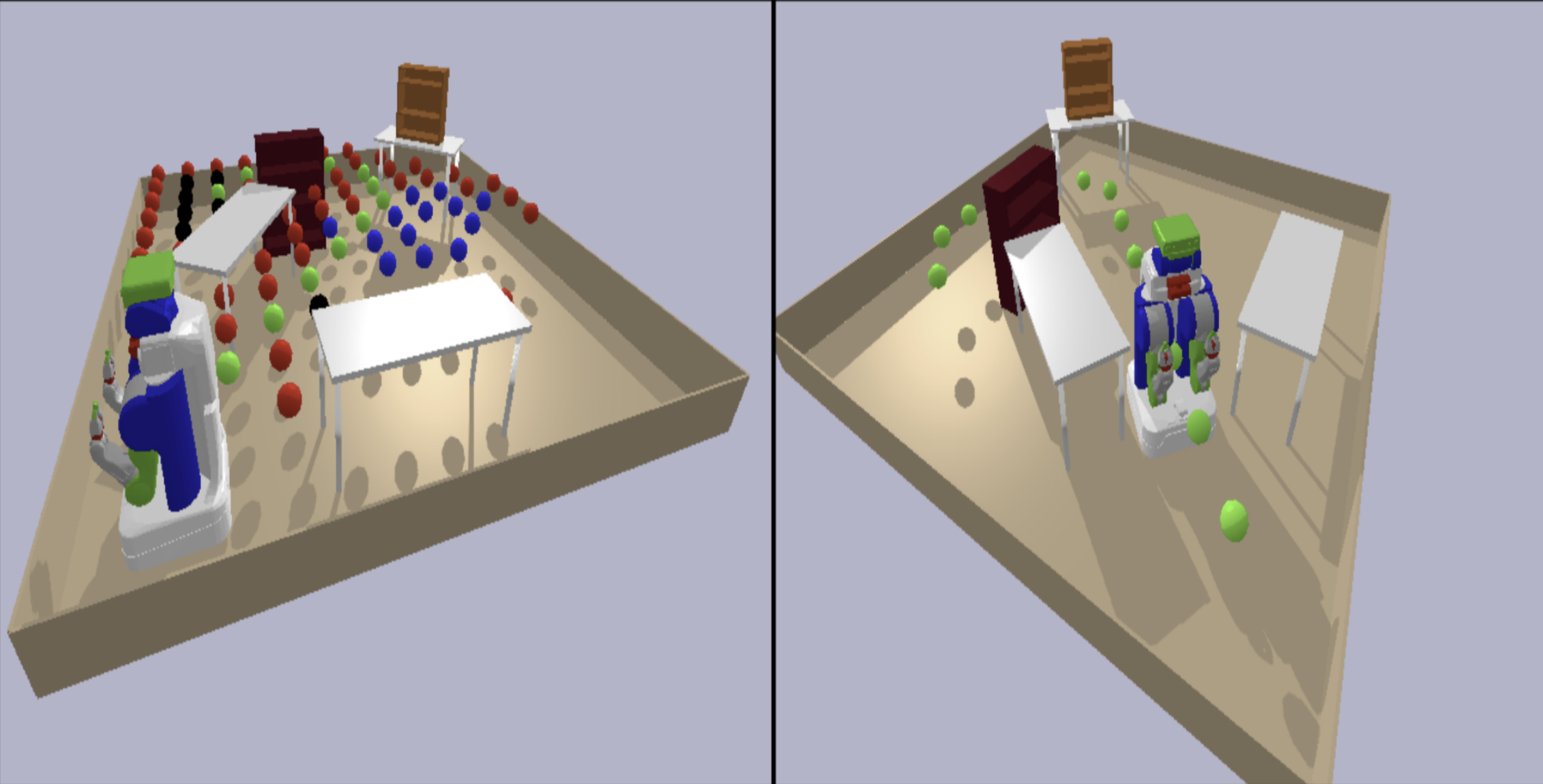

Reinforcement Learning Based Adaptive Grasping

Developed and trained distinct RL-based policies for ground, side, and unoccluded grasps in MuJoCo, achieving a 100% simulated success rate across all occlusion types. Evaluated two policy selection strategies, box-size heuristics and Q-maximization, demonstrating robust grasp execution under partial visibility.
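
Q-maximization as a selection rule can be sketched as follows, assuming each grasp policy comes with its own learned critic Q(s, a), as in actor-critic methods. The name `select_by_q`, the expert dictionary layout, and the toy lambdas are hypothetical; the box-size heuristic is not shown.

```python
from typing import Callable, Dict, Sequence, Tuple

State = Sequence[float]
Action = Sequence[float]
Policy = Callable[[State], Action]
Critic = Callable[[State, Action], float]


def select_by_q(state: State, experts: Dict[str, Tuple[Policy, Critic]]) -> Tuple[str, Action]:
    """Return (name, action) of the expert whose critic scores its own action highest."""
    best_name, best_action, best_q = "", (), float("-inf")
    for name, (policy, critic) in experts.items():
        action = policy(state)     # each expert proposes a grasp action
        q = critic(state, action)  # its critic estimates the expected return
        if q > best_q:
            best_name, best_action, best_q = name, action, q
    return best_name, best_action


# Toy experts with made-up critics, for illustration only
experts = {
    "ground": (lambda s: [0.0], lambda s, a: 1.0),
    "side": (lambda s: [1.0], lambda s, a: 2.0 + s[0]),
    "unoccluded": (lambda s: [2.0], lambda s, a: 0.5),
}
print(select_by_q([0.0], experts))  # ('side', [1.0])
```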

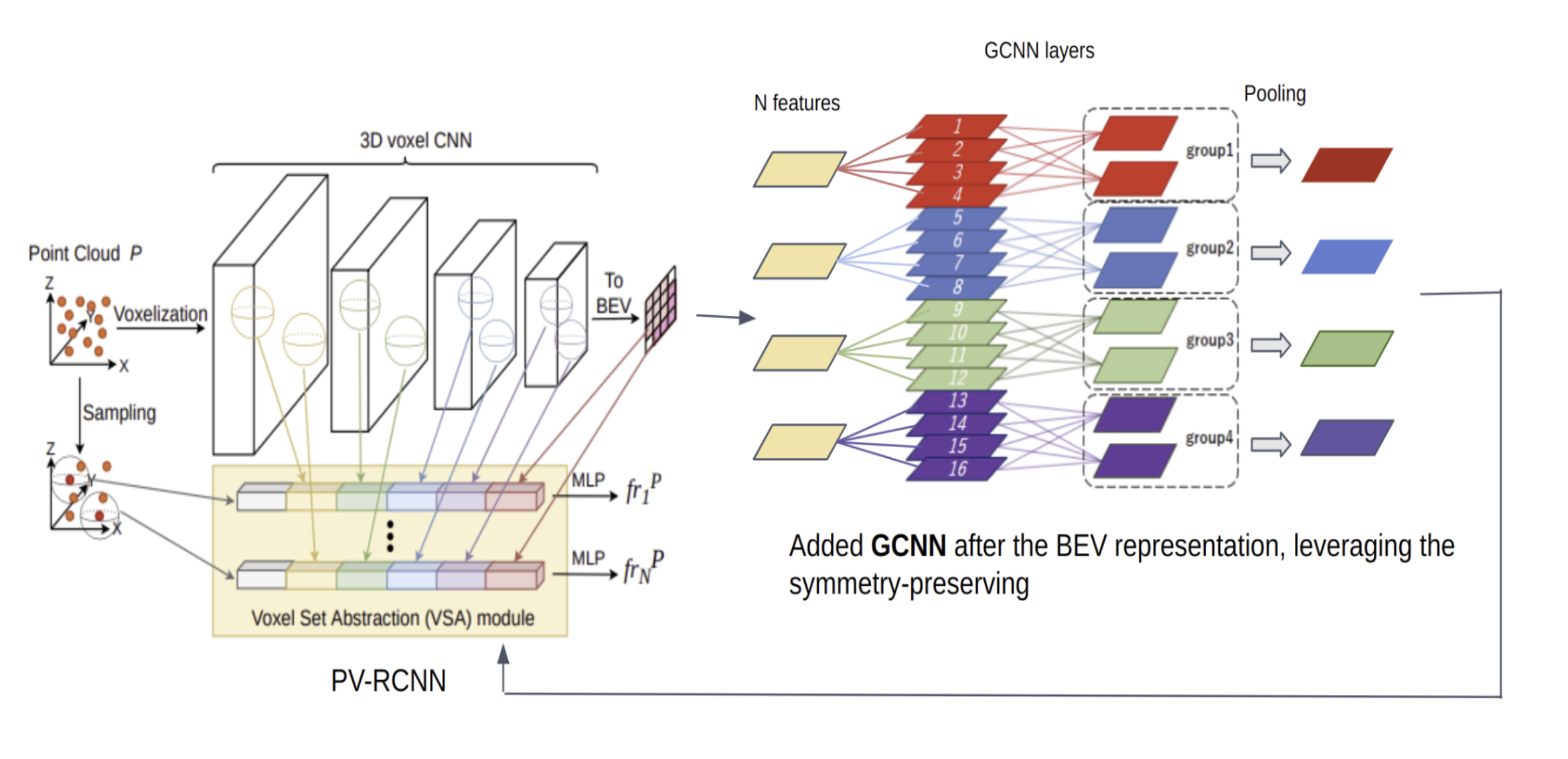

GCNN-based Deep Loop Closure Detection for SLAM

Extended the LCDNet architecture by integrating Cyclic Group Convolutional Neural Networks (GCNNs) to enable rotation-invariant loop closure detection. On symmetric scenes from KITTI-360, the method improved translational and rotational accuracy relative to the original LCDNet.
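
Why cyclic groups help with rotation invariance can be shown on a toy example. Assuming the scan is binned into a polar grid (rows are range bins, columns are n azimuth bins), a yaw rotation by a multiple of 360/n degrees is just a cyclic shift of the columns, and correlating circularly along the azimuth axis is a group correlation over the cyclic group C_n. This is a hypothetical illustration of the principle, not the GCNN layers used in the project.

```python
from typing import List

Grid = List[List[float]]  # rows = range bins, columns = azimuth bins


def rotate_yaw(x: Grid, shift: int) -> Grid:
    """Yaw rotation by shift * 360/n degrees = cyclic shift along azimuth."""
    n = len(x[0])
    return [[row[(a - shift) % n] for a in range(n)] for row in x]


def cn_correlate(x: Grid, w: Grid) -> List[float]:
    """C_n group correlation: slide kernel w cyclically around the azimuth axis.

    w has the same number of rows as x and spans len(w[0]) azimuth taps.
    """
    n, k = len(x[0]), len(w[0])
    return [
        sum(x[r][(a + t) % n] * w[r][t] for r in range(len(w)) for t in range(k))
        for a in range(n)
    ]


def invariant_descriptor(x: Grid, kernels: List[Grid]) -> List[float]:
    """Max-pool each equivariant response over the group: rotation invariant."""
    return [max(cn_correlate(x, w)) for w in kernels]
```

Shifting the input shifts the response map by the same amount (equivariance), so max-pooling over the azimuth axis yields a descriptor that does not change under these discrete yaw rotations.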

Anytime Path Planning for Time-Critical Robot Navigation Using ANA*

Implemented and compared A* and ANA* for 8-connected, grid-based robot navigation with orientation and obstacle handling. Showed that ANA* quickly finds a feasible path and refines it over time, making it well suited to real-time, time-constrained tasks such as doorway traversal with a PR2.
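
The core of ANA* is the priority e(s) = (G - g(s)) / h(s), where G is the cost of the best solution found so far. This Python sketch applies it to a plain 8-connected occupancy grid with an octile-distance heuristic. It is a simplified illustration, not the project code: robot orientation is ignored, `#` marks obstacles, `ana_star` and `octile` are hypothetical names, and before the first solution is found (G infinite) the sketch falls back to greedy best-first ordering on h.

```python
import math
from typing import Dict, Iterator, List, Optional, Tuple

Cell = Tuple[int, int]
SQRT2 = math.sqrt(2.0)
# 8-connected moves: 4 straight (cost 1) and 4 diagonal (cost sqrt(2))
MOVES = [(dr, dc) for dr in (-1, 0, 1) for dc in (-1, 0, 1) if (dr, dc) != (0, 0)]


def octile(a: Cell, b: Cell) -> float:
    """Admissible, consistent heuristic for 8-connected unit grids."""
    dr, dc = abs(a[0] - b[0]), abs(a[1] - b[1])
    return max(dr, dc) + (SQRT2 - 1.0) * min(dr, dc)


def ana_star(grid: List[str], start: Cell, goal: Cell) -> List[Tuple[float, List[Cell]]]:
    """Return each improved (cost, path) in the order found; the last is optimal."""
    rows, cols = len(grid), len(grid[0])
    G = math.inf  # cost of the best solution found so far
    g: Dict[Cell, float] = {start: 0.0}
    pred: Dict[Cell, Optional[Cell]] = {start: None}
    open_set = {start}
    solutions: List[Tuple[float, List[Cell]]] = []

    def h(s: Cell) -> float:
        return octile(s, goal)

    def e(s: Cell) -> float:
        # ANA* key (G - g) / h; greedy on h until a first solution exists
        if math.isinf(G):
            return -h(s)
        return math.inf if h(s) == 0 else (G - g[s]) / h(s)

    def successors(s: Cell) -> Iterator[Tuple[Cell, float]]:
        for dr, dc in MOVES:
            r, c = s[0] + dr, s[1] + dc
            if 0 <= r < rows and 0 <= c < cols and grid[r][c] != "#":
                yield (r, c), (SQRT2 if dr and dc else 1.0)

    while open_set:
        # ImproveSolution: expand the max-e state until the goal is popped
        while open_set:
            s = max(open_set, key=e)
            open_set.remove(s)
            if s == goal:
                G = g[s]
                path: List[Cell] = []
                node: Optional[Cell] = s
                while node is not None:
                    path.append(node)
                    node = pred[node]
                solutions.append((G, path[::-1]))
                break
            for nxt, cost in successors(s):
                new_g = g[s] + cost
                if new_g < g.get(nxt, math.inf):
                    g[nxt], pred[nxt] = new_g, s
                    if new_g + h(nxt) < G:  # only keep states that can still win
                        open_set.add(nxt)
        # Prune states whose lower bound can no longer beat the current solution
        open_set = {s for s in open_set if g[s] + h(s) < G}
    return solutions
```

Each returned entry is strictly cheaper than the previous one; stopping early yields the best path found so far, which is what makes the search anytime.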

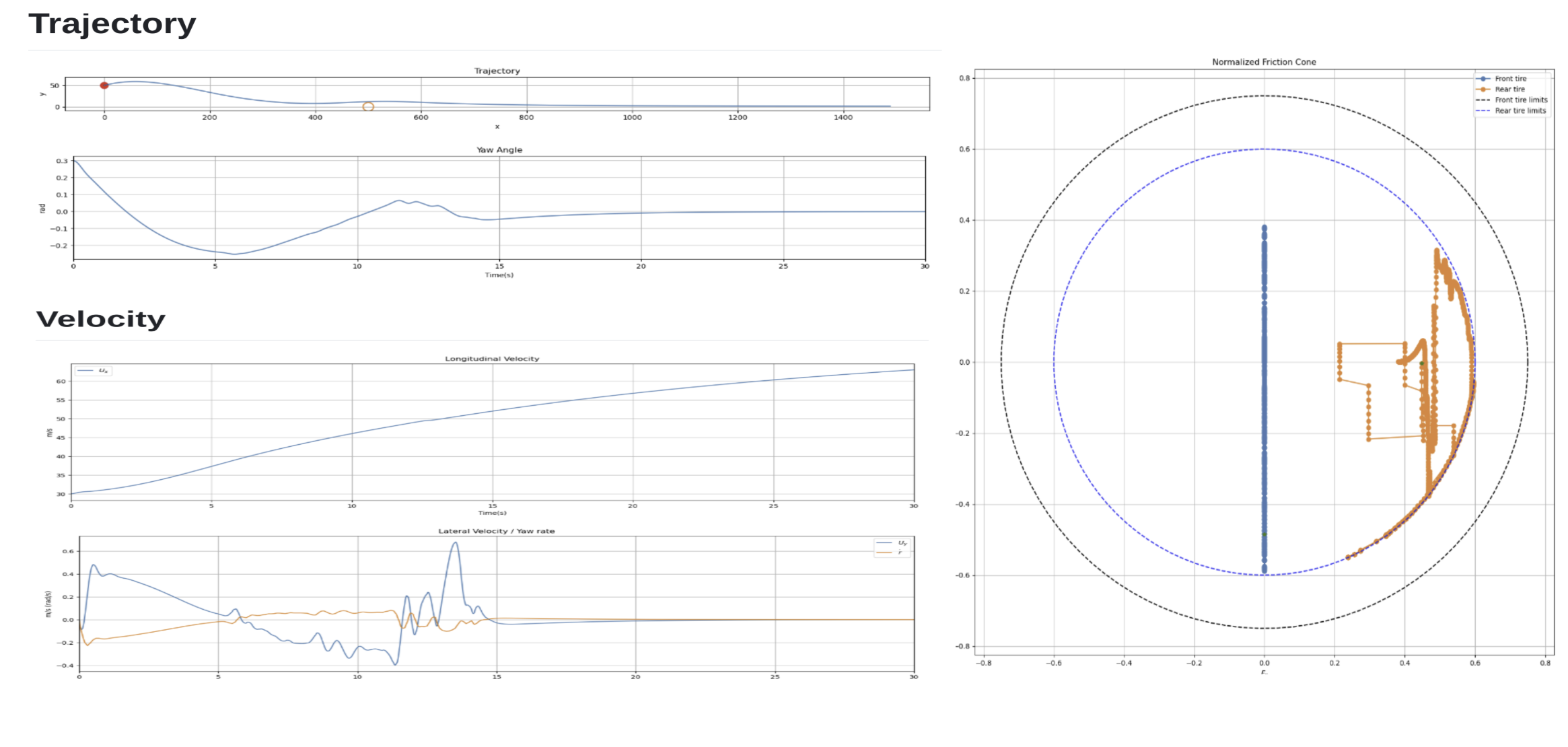

Constrained LQR and CMPC for Trajectory Tracking

Implemented a Linear Quadratic Regulator (LQR) and Constrained Model Predictive Control (CMPC) for vehicle trajectory tracking, using Jacobian-based linearization of the nonlinear dynamics. Designed cost functions and constraint matrices that enforce the system dynamics and input bounds while minimizing deviation from a reference trajectory over the prediction horizon.
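
For the LQR half, finite-horizon gains come from the backward Riccati recursion. This pure-Python sketch computes them for a generic linear model x_{k+1} = A x_k + B u_k. The double-integrator lateral-error model, weights, and horizon in the usage lines are hypothetical stand-ins for the linearized vehicle dynamics, and the constrained MPC, which needs a QP solver, is not shown.

```python
from typing import List

Matrix = List[List[float]]


def matmul(A: Matrix, B: Matrix) -> Matrix:
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def transpose(A: Matrix) -> Matrix:
    return [list(col) for col in zip(*A)]


def add(A: Matrix, B: Matrix, sign: float = 1.0) -> Matrix:
    # Elementwise A + sign * B (sign=-1.0 gives subtraction)
    return [[a + sign * b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]


def inverse(M: Matrix) -> Matrix:
    """Gauss-Jordan inverse with partial pivoting (fine for tiny matrices)."""
    n = len(M)
    aug = [[float(v) for v in row] + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(M)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[piv] = aug[piv], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [a - f * b for a, b in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def lqr_gains(A: Matrix, B: Matrix, Q: Matrix, R: Matrix, Qf: Matrix, N: int) -> List[Matrix]:
    """Return K_0..K_{N-1} so that u_k = -K_k x_k minimizes
    x_N' Qf x_N + sum_k (x_k' Q x_k + u_k' R u_k)."""
    P = Qf
    gains = []
    for _ in range(N):
        BtP = matmul(transpose(B), P)
        # K = (R + B'PB)^-1 B'PA
        K = matmul(inverse(add(R, matmul(BtP, B))), matmul(BtP, A))
        # P <- Q + A'P(A - BK)
        P = add(Q, matmul(matmul(transpose(A), P), add(A, matmul(B, K), -1.0)))
        gains.append(K)
    return gains[::-1]  # computed backward in time, returned forward


# Hypothetical lateral-error model: state [offset, offset rate], input = lateral accel
dt = 0.1
A = [[1.0, dt], [0.0, 1.0]]
B = [[0.5 * dt * dt], [dt]]
Q = [[1.0, 0.0], [0.0, 1.0]]
R = [[0.1]]
Qf = [[10.0, 0.0], [0.0, 10.0]]
gains = lqr_gains(A, B, Q, R, Qf, N=100)
x = [[1.0], [0.0]]  # 1 m initial offset, zero rate
for K in gains:
    u = [[-v for v in row] for row in matmul(K, x)]  # u_k = -K_k x_k
    x = add(matmul(A, x), matmul(B, u))
```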

PoseCNN: Object Pose Estimation

Implemented PoseCNN using a VGG16-based backbone to estimate 6-DOF object poses with segmentation, translation, and rotation outputs. Integrated RoI pooling, quaternion regression, and a Hough Voting layer to achieve pose accuracy within 5 degrees.
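
Assuming the 5-degree figure refers to the geodesic angle between the predicted and ground-truth rotations, it can be computed directly from the two quaternions as theta = 2 arccos(|<q1, q2>|). A small hypothetical helper, assuming (w, x, y, z) ordering:

```python
import math
from typing import Sequence


def rotation_error_deg(q_pred: Sequence[float], q_gt: Sequence[float]) -> float:
    """Angle of the relative rotation between two quaternions, in degrees.

    Inputs are normalized here; abs() handles the double cover (q and -q
    encode the same rotation).
    """
    n1 = math.sqrt(sum(v * v for v in q_pred))
    n2 = math.sqrt(sum(v * v for v in q_gt))
    dot = abs(sum(a * b for a, b in zip(q_pred, q_gt))) / (n1 * n2)
    return math.degrees(2.0 * math.acos(min(1.0, dot)))  # clamp rounding above 1


def within_threshold(q_pred: Sequence[float], q_gt: Sequence[float],
                     thresh_deg: float = 5.0) -> bool:
    return rotation_error_deg(q_pred, q_gt) <= thresh_deg
```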

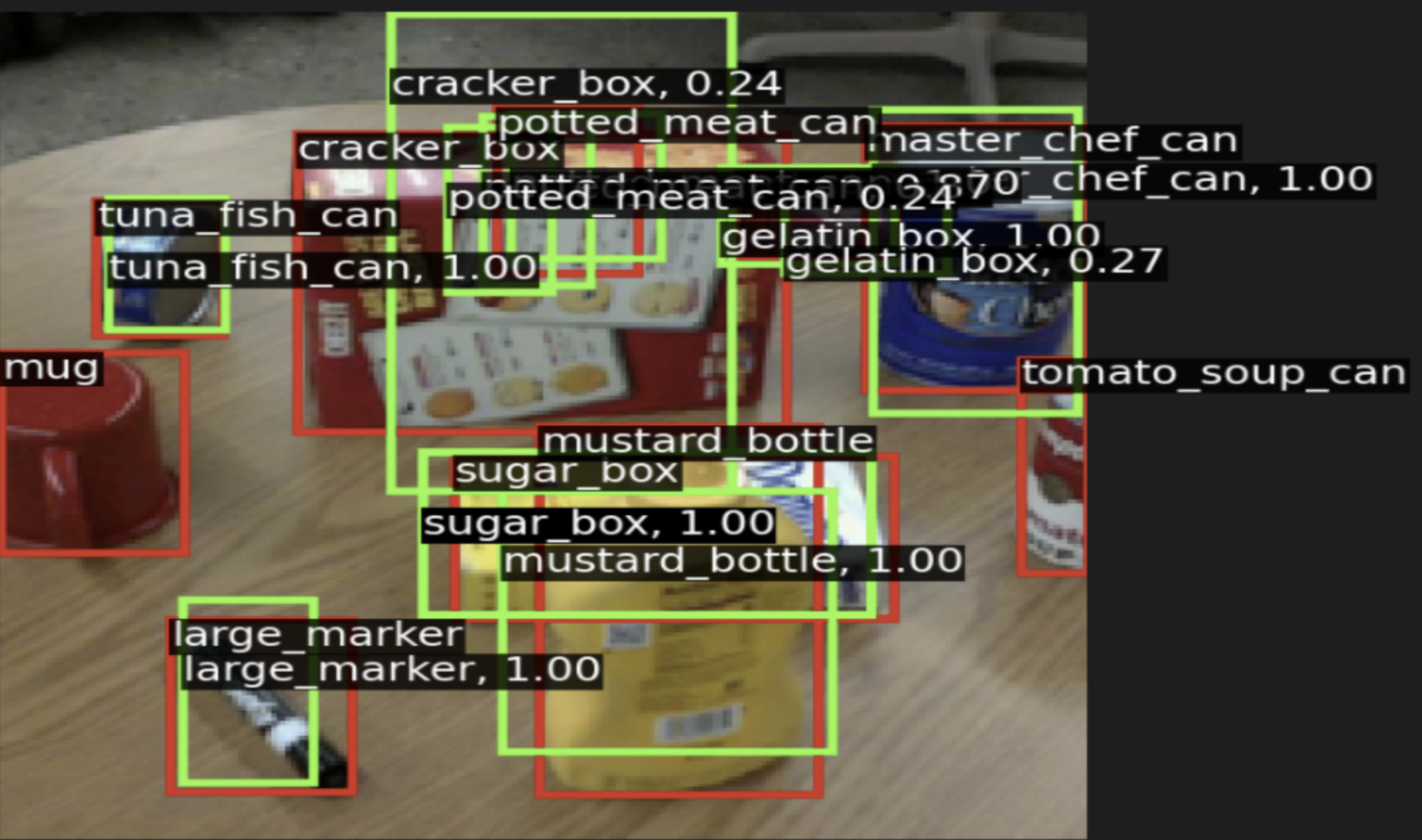

Faster R-CNN: Two-Stage Object Detection

Built a two-stage object detector from scratch, implementing a Region Proposal Network (RPN) to generate candidate regions and a RoI head for classification and box refinement. Achieved 25% mAP at an IoU threshold of 0.7.
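
mAP at an IoU threshold of 0.7 rests on two pieces: box IoU and greedy matching of score-sorted detections to ground truth. A minimal sketch for one class in one image follows. The function names and the (x1, y1, x2, y2) box convention are assumptions, and the precision/recall and averaging steps that turn these labels into mAP are omitted.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) with x2 > x1, y2 > y1


def iou(a: Box, b: Box) -> float:
    # Width and height of the overlap rectangle (0 if the boxes are disjoint)
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def match_detections(dets: Sequence[Tuple[float, Box]], gts: Sequence[Box],
                     thresh: float = 0.7) -> List[bool]:
    """Label each (score, box) detection as TP/FP, highest score first.

    Each ground-truth box can be claimed once, so duplicates become FPs.
    """
    used = [False] * len(gts)
    is_tp = [False] * len(dets)
    for i in sorted(range(len(dets)), key=lambda k: -dets[k][0]):
        best_j, best_iou = -1, thresh
        for j, gt in enumerate(gts):
            overlap = iou(dets[i][1], gt)
            if not used[j] and overlap >= best_iou:
                best_j, best_iou = j, overlap
        if best_j >= 0:
            used[best_j] = True
            is_tp[i] = True
    return is_tp
```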

Robustness Analysis of Centralized Multi-Robot SLAM

Extended the JORB-SLAM framework to support three autonomous agents and evaluated its resilience to data loss using Chamfer and Hausdorff distances on the KITTI dataset. Developed a systematic seeded-error injection mechanism to simulate real-world SLAM failures and benchmark robustness across ORB-SLAM2, JORB-SLAM, and Collaborative ORB-SLAM2 under increasing noise levels.
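
The two metrics and a seeded error injector can be sketched in a few lines. This assumes Chamfer distance is the sum of the mean nearest-neighbor distances in both directions (conventions vary, for example squared distances) and uses brute-force search, so it only suits small point sets. `drop_points` is a hypothetical stand-in for the project's error-injection mechanism, modeling data loss as reproducible random point removal.

```python
import math
import random
from typing import List, Sequence, Tuple

Point = Tuple[float, ...]


def _nn_dists(src: Sequence[Point], dst: Sequence[Point]) -> List[float]:
    # Brute-force nearest-neighbor distance from every src point to dst
    return [min(math.dist(p, q) for q in dst) for p in src]


def chamfer(a: Sequence[Point], b: Sequence[Point]) -> float:
    """Symmetric Chamfer distance: mean NN distance a->b plus b->a."""
    return sum(_nn_dists(a, b)) / len(a) + sum(_nn_dists(b, a)) / len(b)


def hausdorff(a: Sequence[Point], b: Sequence[Point]) -> float:
    """Symmetric Hausdorff distance: worst-case NN distance in either direction."""
    return max(max(_nn_dists(a, b)), max(_nn_dists(b, a)))


def drop_points(points: Sequence[Point], drop_frac: float, seed: int) -> List[Point]:
    """Seeded data-loss injection: the same seed always drops the same points."""
    rng = random.Random(seed)
    keep = round(len(points) * (1.0 - drop_frac))
    return rng.sample(list(points), keep)
```

Because Chamfer averages while Hausdorff takes the maximum, the pair distinguishes widespread small drift from a few large outliers, which is useful when comparing how different SLAM systems degrade.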

Education